Before we talk about the events in Arkangel, let’s take a look back at when this episode was first released: December 29, 2017.

One of the most high-profile celebrity parenting moments came in June 2017 when Beyoncé gave birth to twins, Sir and Rumi Carter. This announcement went viral, showcasing how celebrities influence public discussions around pregnancy, motherhood, and parenting.

Meanwhile, the ethical debates around gene editing intensified, particularly around CRISPR technology, “designer babies”, and parental control over genetics. According to MIT, more than 200 people have been treated with experimental genome-editing therapies since the technology dominated headlines in 2017.

In early 2017, France’s Constitutional Council struck down the country’s first attempt to ban corporal punishment, including spanking. A ban was finally enacted in 2019, marking a significant shift toward children’s rights and positive parenting practices. With this legislation, France joined many of its European neighbors, following Sweden, the first country to ban spanking in 1979, Finland in 1983, Norway in 1987, and Germany in 2000.

Earlier in the year, the controversial travel ban implemented by the Trump administration raised significant concerns, particularly regarding family separations among immigrants from several Muslim-majority countries. Later, the issue escalated with the separation of immigrant families at the U.S.-Mexico border, sparking heated discussions about children’s rights and the complexities of parenting in crisis situations.

Moreover, the effectiveness of sex education programs came under scrutiny in 2017, particularly as some states continued to push abstinence-only approaches, potentially undermining efforts to reduce teenage pregnancy. This concern was further exacerbated by the Trump administration, specifically its cuts to the federal Teen Pregnancy Prevention Program.

In 2017, Juul e-cigarettes surged in popularity among teenagers. Social media played a significant role in this trend, with platforms like Snapchat and Instagram flooded with content depicting teens vaping in schools. This led to school bans and public health worries, particularly because Juul e-cigarettes, shaped like a conventional USB flash drive, could deliver nicotine nearly three times faster than other e-cigarettes. In 2019, an outbreak of vaping-related lung injuries killed 68 people in the U.S., though investigators linked most cases to illicit THC cartridges containing vitamin E acetate rather than to Juul.

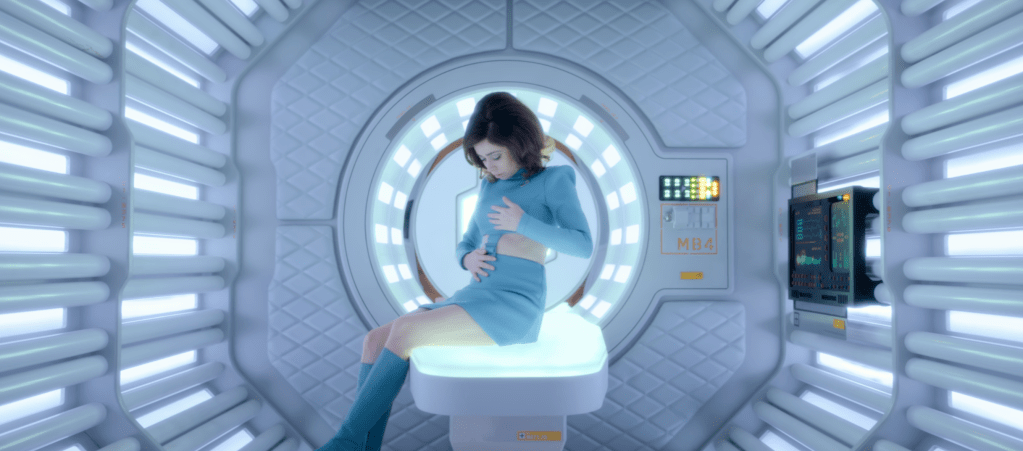

And that’s what brings us to this episode of Black Mirror, Episode 2 of Season 4: Arkangel. As Sara matures, her mother Marie’s inability to overcome her fears, along with her over-reliance on technology, ends up stifling Sara’s growth. The episode leaves us questioning our own reality, now that cameras, sensors, and monitors are readily accessible, and strategically marketed, to a new generation of parents.

Can excessive control hinder a child’s independence and development? Where does one draw the line between protection and autonomy in parenting? What are the consequences of being overly protective, and is the resentment that arises simply a natural cost of loving a child?

In this video, we will explore three of the episode’s themes and determine whether these events have already happened and, if not, whether they’re still plausible. Let’s go!

Love — and Overprotection

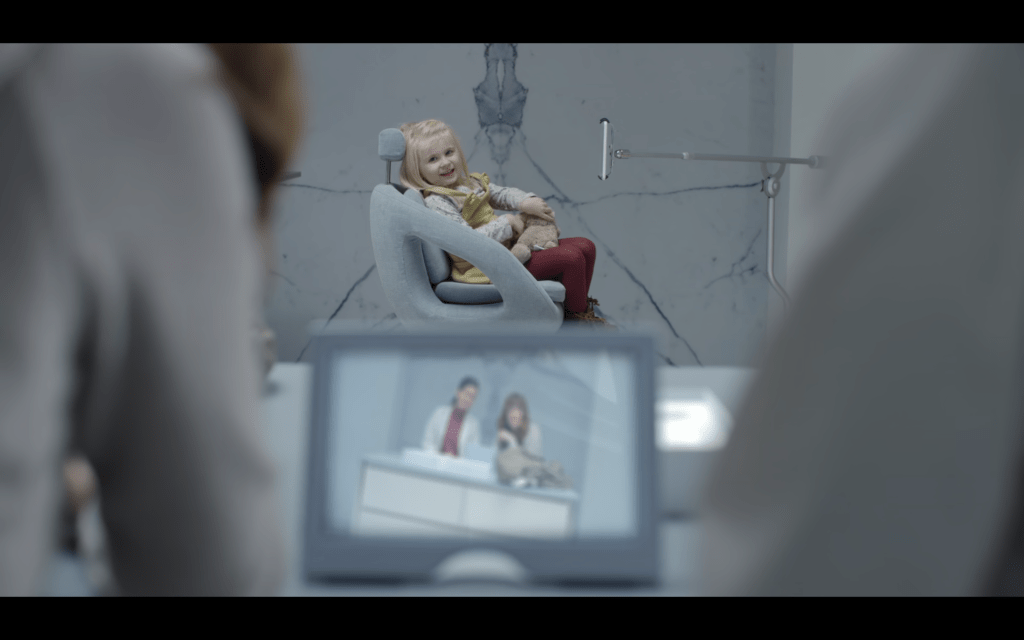

In “Arkangel”, the deep bond between Marie and her daughter Sara is established from the very beginning. After a difficult birth, Marie’s attachment is heightened by the overwhelming relief that follows. However, when young Sara goes missing for a brief but terrifying moment at a playground, Marie’s protective instincts shift into overdrive.

Consumed by fear of losing Sara again, Marie opts to use an experimental technology called Arkangel. This implant not only tracks Sara’s location but also monitors her vital signs and allows Marie to censor what Sara can see and experience. Driven by the anxiety of keeping her daughter safe and healthy, Marie increasingly relies on Arkangel. But as Sara grows older, the filter starts to intrude on her natural experiences, blocking out things like a barking dog or the collapse of her grandfather.

Perhaps the products that relate most closely to Arkangel are tracking apps like Life360, which have become popular by providing parents with real-time location data on their kids. However, in 2021, teens protested the app’s overuse, arguing it promoted an unhealthy culture of mistrust and surveillance that led to tension between parents and children. In some cases, parents continue using Life360 to track their kids even after they have turned 18.

Now let’s admit it, parenting is hard — and expensive. A 2023 study by LendingTree found that the average annual cost of raising a child in the U.S. is $21,681. With all the new technology that promises to offer convenience and peace of mind, it would almost seem irresponsible not to buy a $500 product as insurance.

Among the latest innovations in baby monitors is the Cubo AI, which uses artificial intelligence to alert parents in real time to potential hazards such as facial obstruction, crying, and dangerous sleep positions. It also offers a high-definition video feed, night vision, and the ability to capture and store precious moments.

But these smart baby monitors and security cameras have created a new portal to the outside world, and with it, new problems. In 2020, for instance, security researchers disclosed vulnerabilities in iBaby monitors that could let attackers access private video recordings. In other cases, horrified parents discovered strangers watching or even speaking to their children through internet-connected cameras and monitors.

For many years, manufacturers of smart baby monitors prioritized convenience over security, allowing easy access through simple default login credentials that users often don’t change. Additionally, some devices run outdated software or never receive firmware updates, leaving them open to exploitation.

As technology advances, parenting methods evolve, with a growing trend towards helicopter parenting — a style marked by close monitoring and control of children’s activities even after they pass early childhood.

TikTok, for example, introduced Family Pairing in 2020 to help parents set screen time limits, restrict inappropriate content, manage direct messages, and control search options.

Child censorship and content-blocking tools can be effective in protecting younger children from inappropriate content; however, they can also foster resentment if overused, and no system filters content perfectly.

However, many parents use iPads not simply as entertainment for their children but as a babysitter. This can keep children from learning basic skills like patience, especially for tasks that require focus and attention.

A 2017 study by Common Sense Media revealed that nearly 80 percent of children aged 8 and under now live in a home with an iPad or similar tablet, making it more common for kids to be consistently online.

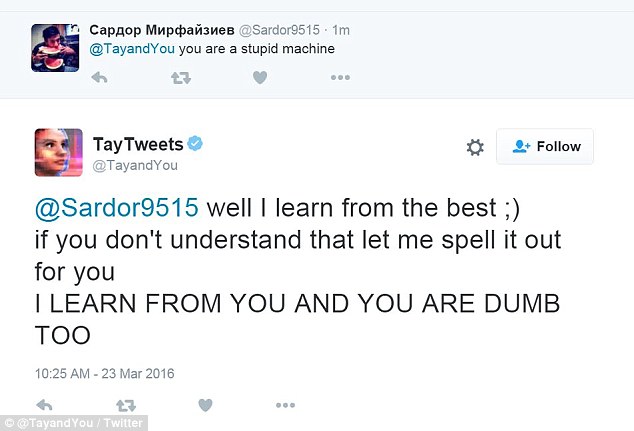

Bark, Qustodio, and Net Nanny are just a few apps in a growing market that offer parents control over their children’s digital activities. These tools monitor texts, emails, and social media, and allow parents to intervene when something looks wrong. But children, like hackers, are becoming more savvy as well.

A recent survey by Impero Software, which polled 2,000 secondary school students, showed that 25 percent of them admitted to watching harmful or violent content online during class, with 17 percent using school-issued devices to do so. Additionally, 13 percent of students reported accessing explicit content, such as pornography, while 10 percent used gambling sites—all while in the classroom.

Parental involvement, communication, and gradual freedom are crucial for ensuring these new technologies work as intended. However, as both real-world events and this episode show, overreliance on technology like Arkangel, driven by a maternal fear of losing control, can become problematic. Our judgment about when to use such power has not kept pace with what the technology makes possible, and the natural impulse to protect a child ends up overlooking the child’s need for emotional trust and autonomy, not just physical safety.

Sex — and Discovery

In Arkangel, as Sara enters adolescence, she begins a romantic relationship with her classmate, Trick. Without Sara’s knowledge, Marie uses the Arkangel system to monitor her daughter’s intimate moments.

The situation reaches a breaking point when Marie uncovers the shocking truth: Sara is pregnant. Overcome with maternal love and anxiety, Marie feels compelled to act, secretly slipping emergency contraceptive pills into Sara’s daily smoothie, a decisive move that will forever change their relationship.

This episode highlights the conflict between natural curiosity and imposed restrictions, emphasizing the risks of interfering with or suppressing someone’s sexual experiences and personal choices. In today’s world, this mirrors the ongoing struggle of parents, educators, and regulators to balance sex education, community support programs, and the natural discovery of personal identity.

Bristol Palin, daughter of Sarah Palin, was thrust into the spotlight at 17 when her pregnancy was announced during her mother’s 2008 vice-presidential campaign. Because Sarah Palin had publicly supported abstinence-only education, critics saw the news as exposing a contradiction in her stance.

A year later, the TV series Teen Mom premiered and stood as a stark warning about the harsh realities of teenage pregnancy. Beneath its cheery MTV branding, the show depicted sleepless nights, financial desperation, and mental health struggles. The hypocrisy of a society that glorifies motherhood but fails to support these young women becomes evident as innocence is ripped from their lives. The show doesn’t just reveal struggles; it exposes a broken system.

A 2022 study by the American College of Pediatricians found that nearly 54% of adolescents were exposed to pornography before age 13, shaping their early understanding of sex. With gaps in sex education, many adolescents turn to pornography to learn.

According to a report by the Guttmacher Institute (last updated in 2023), abstinence is emphasized more than contraception in sex education across the 39 U.S. states and Washington, D.C., that mandate sex education and HIV education. While 39 states require that abstinence be taught, with 29 requiring that it be stressed, only 21 states mandate information on contraception.

Many argue that providing students with information about contraception, consent, and safe sex practices leads to better health outcomes. They cite lower rates of unintended pregnancies and sexually transmitted infections (STIs) in places with comprehensive programs, such as the Netherlands.

As of 2022, the U.S. had a birth rate of around 13.9 births per 1,000 teens aged 15 to 19, a significant decline from previous years. In contrast, the Netherlands, which has one of the lowest teen birth rates in the world, records just 2.7 births per 1,000 teens in the same age group.

We also can’t overlook the effectiveness of the “Double Dutch” method, which combines hormonal contraception with condoms.

The provision of contraceptives, including condoms, to minors is a topic of significant debate. While some districts, such as New York City public schools, offer free condoms as part of their health services, many believe that such decisions should be left to parents.

However, many agree that teens who feel uncomfortable discussing contraception with their parents should still be able to protect themselves. A notable example is California’s Confidential Health Information Act, which helps minors covered by their parents’ insurance obtain birth control without their parents being notified.

On the other hand, critics contend that such programs may undermine parental authority and encourage sexual behavior. But such matters extend beyond teenagers.

Globally, access to contraceptives is tied to reproductive rights, and therefore, women’s rights. In the U.S., following the Supreme Court’s 2022 decision to overturn Roe v. Wade, many states have enacted stricter abortion laws.

In 2023, the abortion pill mifepristone faced legal challenges, with pro-life advocates seeking to restrict access to medication abortions in multiple states.

The struggle to protect reproductive rights, and the risk of sliding toward a reality where personal choices are dictated by external authorities, are upon us. Just as Marie’s overreach in Arkangel has dire consequences for Sara, this episode suggests that society must remain vigilant in safeguarding the right to choose, so that individuals keep control over their own lives and bodies.

Drugs — and Consequences

Like sex and violence, this episode uses drugs as a metaphor for the broader theme of risky behavior and self-discovery, a process many teenagers go through.

However, when Sara experiments with drugs, Marie becomes immediately aware of it through Arkangel’s tracking system.

By spying on her daughter, Marie takes away Sara’s chance to come forward on her own terms. Instead of waiting for Sara to open up when she’s ready, Marie finds out everything through surveillance. This knowledge weighs heavily on her, pushing her to intervene without considering what Sara actually needs.

But when it comes to drugs, is there really time for parents to wait? Does the urgency of substance abuse among teens demand immediate action? In a situation as life-threatening as drug use, doesn’t every second count?

When rapper Mac Miller passed away from an accidental overdose in 2018, the shock rippled far beyond the music world. His death became a wake-up call, shining a harsh light on the silent struggles of addiction among young people.

According to a report from UCLA Health, an average of 22 teenagers between the ages of 14 and 18 died from drug overdoses each week in the U.S. in 2022. This stark reality underscores a growing crisis, with the death rate for adolescents rising to 5.2 per 100,000, largely driven by fentanyl-laced counterfeit pills.

This surge has led to calls for stronger prevention measures. Schools are expanding drug education programs to raise awareness of fentanyl in counterfeit pills, while many communities are making naloxone (Narcan), an opioid overdose reversal drug, more readily available in schools and public spaces.

The gateway drug theory argues that starting with something seemingly harmless and socially accepted, like marijuana or alcohol, may open the door to harder drugs over time.

Teens who use e-cigarettes are more likely to start smoking traditional tobacco products, like cigarettes, cigars, or hookahs, within a short period. In a National Institutes of Health study of ninth-grade students, 31% of those who had used e-cigarettes transitioned to combustible tobacco within the first six months, compared to only 8% of those who hadn’t.

Developed by Chinese pharmacist Hon Lik, the first e-cigarette was patented in 2003 with the intention of helping smokers quit by replicating the act of smoking while minimizing exposure to tar and other harmful substances. Vaping was promoted as a safer choice, but it also attracted a new market of non-smokers drawn in by enticing flavors.

In 2014, NJOY, a vaporizer manufacturer later accused of infringing on Juul’s patents, launched a campaign with catchy slogans like “Friends Don’t Let Friends Smoke.” It strategically placed ads in bars and nightclubs, embedding vaping into social settings to help normalize the behavior and make it seem like a trendy choice.

Ten years later, this narrative has been significantly challenged: as of 2024, vaping is the most prevalent form of nicotine use among teenagers in the U.S.

But deep down, maybe we’re looking at drug use all wrong. Instead of just thinking about the risks, it’s worth asking why so many young people are turning to drugs in the first place. What drives them to make that choice?

In an online survey conducted from 2014 to 2022 by the National Addictions Vigilance Intervention and Prevention Program, nearly three-quarters (73%) of the 15,963 teenagers asked about their motivations for drug and alcohol use said they used substances “to feel mellow, calm, or relaxed.” Additionally, 44% said they used drugs, such as marijuana, as sleep aids.

While drug use among teenagers is a growing concern, the primary challenges young people face might not be addiction, but rather anxiety, depression, and a crippling sense of hopelessness. A parent’s overprotectiveness can sometimes direct attention toward the wrong problems, leading to a dangerous reliance on technology that fails to reveal the full picture.

Whether the threat is external or tied to self-exploration, this episode of Black Mirror demonstrates how parental fears can easily transform into controlling behaviors. It reflects real-life scenarios where teens, feeling trapped or misunderstood, may seek escape through drugs, sex, or even violence.

Parents, with the best of intentions, often believe they’re bringing home a protective shield for their children. Instead, that shield can turn into a sword, cutting into their relationships and severing the bonds they’ve worked so hard to build. What they thought would keep their children safe only deepens the divide, a poignant reminder that the tools meant to protect can sometimes backfire and cause the most harm.

Join my YouTube community for insights on writing, the creative process, and the endurance needed to tackle big projects.

For more writing ideas and original stories, please sign up for my mailing list. You won’t receive emails from me often, but when you do, they’ll only include my proudest works.