Before we dive into the events of Joan Is Awful, let’s flash back to when this episode first aired: June 15, 2023.

In 2023, the tech industry faced a wave of major layoffs. Meta cut 10,000 employees and closed 5,000 open positions in March. Amazon followed, letting go of 9,000 workers that same month. Microsoft reduced its workforce by 10,000 employees in early 2023, while Google announced its own significant layoffs, contributing to a broader trend of instability in even the largest, most influential tech companies.

Netflix released Depp v. Heard in 2023. The three-part documentary covers the defamation trial between Johnny Depp and Amber Heard and explores the viral spectacle that surrounded it online, showing how social media, memes, and influencer commentary amplified every moment.

Meanwhile, incidents of deepfakes surged dramatically. In North America alone, AI-generated videos and audio clips increased tenfold in 2023 compared to the previous year, with a 1,740% spike in malicious use.

In early 2023, a video began circulating on YouTube and across social media that seemed to show Elon Musk in a CNBC interview. The Tesla CEO appeared calm and confident as he promoted a new cryptocurrency opportunity. It looked authentic enough to fool thousands. But the entire thing was fake.

That same year, the legal system began to catch up. An Australian man named Anthony Rotondo was charged with creating and distributing non-consensual deepfake images on a now-defunct website called Mr. Deepfakes. In 2025, he admitted to the offense and was fined $343,500.

Around the world, banks and cybersecurity experts raised alarms as AI manipulation began to breach biometric systems, leading to a new wave of financial fraud. What started as a novelty filter had become a weapon capable of stealing faces, voices, and identities.

All of this brings us to Black Mirror—Season 6, Episode 1: Joan Is Awful.

The episode explores the collision of personal privacy, corporate control, and digital replication. Joan’s life is copied, manipulated, and broadcast for entertainment before she even has a chance to tell her own story. The episode asks: How much of your identity is still yours when technology can exploit and monetize it? And is it even possible to reclaim control once the algorithm has taken over?

In this video, we’ll unpack the episode’s themes, explore real-world parallels, and ask whether these events have already happened—and if not, whether they are still plausible in our tech-driven, AI-permeated world.

Streaming Our Shame

In Joan Is Awful, we follow Joan, an everyday woman whose life unravels after a streaming platform launches a show that dramatizes her every move. But the show’s algorithm doesn’t just imitate Joan’s life; it distorts it for entertainment. Her friends and coworkers watch the exaggerated version of her and start believing it’s real.

The idea that media can reshape someone’s identity isn’t new. It has been happening for years; with AI, it now happens faster, cheaper, and more convincingly.

Reality television has long operated in this blurred zone between truth and manipulation. Contestants on shows like The Bachelor and Survivor have accused producers of using editing tricks to create villains and scandals that never actually happened.

One of the most striking examples comes from The Bachelor contestant Victoria Larson, who accused producers of “Frankenbiting,” a technique that splices together pieces of dialogue recorded at different times, to make it appear that she was spreading rumors or being manipulative. She said the selective editing destroyed her reputation and derailed her career.

Then there’s the speed of public judgment in the age of social media. In 2020, when Amy Cooper—later dubbed “Central Park Karen”—called the police on a Black bird-watcher, the footage went viral within hours. She was fired, denounced, and doxxed almost overnight.

But Joan Is Awful also goes deeper, showing how even our most intimate spaces are no longer private.

In 2020, a hacker breached Vastaamo, a Finnish psychotherapy service, stealing tens of thousands of patient records, including therapy notes, and blackmailing both the company and individual patients. Finnish authorities eventually caught the hacker, who was sentenced in 2024 for blackmail and unauthorized data breaches.

In this episode, Streamberry’s AI show thrives on a simple principle: outrage. They turn Joan’s humiliation into the audience’s entertainment. The more uncomfortable she becomes, the more viewers tune in. It’s not far from reality.

A 2025 study published in ProMarket found that toxic content drives higher engagement on social media platforms. When users were shielded from negative or hostile posts, they spent 9% less time per day on Facebook, resulting in fewer ads and interactions.

By 2025, over 52% of TikTok videos featured some form of AI generation—synthetic voices, avatars, or deepfake filters. These “AI slop” clips fill feeds with distorted versions of real people, transforming private lives into shareable, monetized outrage.

Joan Is Awful magnifies a reality we already live in. Our online world thrives on the manipulation of emotion, data, and identity, and we’ve signed the release form without even noticing.

Agreeing Away Your Identity

One of the episode’s most painful scenes comes when Joan meets with her lawyer, asking if there’s any legal way to stop the company from using her life as entertainment. But the lawyer points to the fine print—pages of complex legal language Joan had accepted without a second thought.

The moment is both absurd and shockingly real. How many times have you clicked “I agree” without reading a word?

In the real world, most of us do exactly what Joan did. A 2017 Deloitte survey conducted in the U.S. found that over 90% of users accept terms and conditions without reading them. Platforms can then use that data for marketing, AI training, or even creative content, all perfectly legal because we “consented.”

The dangers of hidden clauses extend far beyond digital services. After a woman died in 2023 from an allergic reaction at a Disney World restaurant in Florida, Disney attempted to invoke a controversial contract clause to avoid liability. The company argued that her husband couldn’t sue for wrongful death because, years earlier, he had agreed to arbitration and legal waivers buried in the fine print of a free Disney+ trial.

Critics called the move outrageous, pointing out that Disney was trying to apply streaming service terms to a completely unrelated event. The case exposed how corporations can weaponize routine user agreements to sidestep accountability.

The episode also echoes recent events where real people’s stories have been taken and repackaged for profit.

Take Elizabeth Holmes, the disgraced founder of Theranos. Within months of her trial, her life was dramatized into The Dropout. The Hulu mini-series was produced in real time alongside Holmes’s ongoing trial. As new courtroom revelations surfaced, the writers revised the script. The result was a more layered, unsettling portrayal of Holmes and her business partner Sunny Balwani—a relationship far more complex and toxic than anyone initially imagined.

In Joan Is Awful, the show’s AI doesn’t care about Joan’s truth, and in our world, algorithms aren’t so different. Every click, every “I agree,” and every trending headline feeds an ecosystem that rewards speed over accuracy and spectacle over empathy.

When consent becomes a view or a checkbox and stories become assets, the line between living your life and licensing it starts to blur. And by the time we realize what we’ve signed away, it might already be too late.

Facing the Deepfake

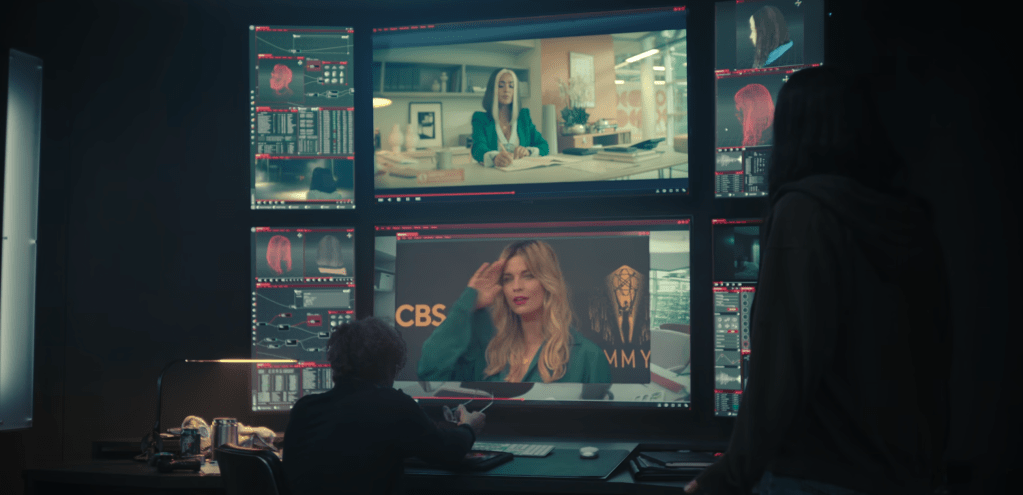

In Joan Is Awful, the twist isn’t just that Joan’s life is being dramatized; it’s that everyone’s life is. What begins as a surreal violation spirals into an infinite mirror. Salma Hayek plays Joan in the Streamberry series, but then Cate Blanchett plays Salma Hayek in the next layer.

The rise of AI and deepfake technology is reshaping how we understand identity and consent. Increasingly, people are discovering their faces, voices, or likenesses used in ads, films, or explicit content without permission.

In 2025, Brazilian police arrested four people for using deepfakes of celebrity Gisele Bündchen and others in fraudulent Instagram ads, scamming victims out of nearly $3.9 million USD.

Governments worldwide are beginning to respond. Denmark’s copyright amendment now treats personal likeness as intellectual property, allowing takedown requests and platform fines even posthumously. In the U.S., the 2025 TAKE IT DOWN Act criminalizes non-consensual AI-generated sexual imagery and impersonation.

In May 2025, Mr. Deepfakes, one of the world’s largest deepfake pornography websites, permanently shut down after a core service provider terminated operations. The platform had been online since 2018 and hosted more than 43,000 AI-generated sexual videos, viewed over 1.5 billion times. Roughly 95% of targets were celebrity women, but researchers identified hundreds of victims who were private individuals.

Despite these legal advances, a fundamental gray area remains. As AI becomes increasingly sophisticated, it is getting harder to tell whether content is drawn from a real person or entirely fabricated.

An example is Tilly Norwood, an AI-generated actress created by Xicoia. In September 2025, Norwood’s signing with a talent agency sparked major controversy in Hollywood.

Her lifelike digital persona was built using the performances of real actors, without their consent. The event marked a troubling shift, as producers continue to push AI-generated actors into mainstream projects.

Actress Whoopi Goldberg voiced her concern, saying, “The problem with this, in my humble opinion, is that you’re up against something that’s been generated with 5,000 other actors.”

“It’s a little bit of an unfair advantage,” she added. “But you know what? Bring it on. Because you can always tell them from us.”

In response to the backlash, Tilly’s creator Eline Van der Velden shared a statement:

“To those who have expressed anger over the creation of our AI character, Tilly Norwood: she is not a replacement for a human being, but a creative work – a piece of art.”

When Joan and Salma Hayek sneak into the Streamberry headquarters, they overhear Mona Javadi, the executive behind the series, explaining the operation. She reveals that every version of Joan Is Awful is generated simultaneously by a quantum computer, endlessly creating new versions of real people’s lives for entertainment. Each “Joan,” “Salma,” and “Cate” is a copy of a copy—an infinite simulation. And it’s not just Joan; the system runs on an entire catalog of ordinary people. Suddenly, the scale of this entertainment becomes clear—it’s not just wide, it’s deep, with endless iterations and consequences.

At the 2025 Runway AI Film Festival, the winning film Total Pixel Space exemplified how filmmakers are beginning to embrace these multiverse-like AI frameworks. Rather than following a single script, the AI engine dynamically generated visual and narrative elements across multiple variations of the same storyline, creating different viewer experiences each time.

AI and deepfake technologies are already capable of realistically replicating faces, voices, and mannerisms, and platforms collect vast amounts of personal data from our everyday lives. Add quantum computing, algorithmic storytelling, and the legal gray areas surrounding consent and likeness, and the episode’s vision of lives being rewritten for entertainment starts to feel less like fantasy.

Every post, every photo, every digital footprint feeds algorithms that could one day rewrite our lives, or maybe already are. Maybe we can slip the loop, maybe we’re already in it, and maybe the trick is simply staying aware that everything we do is already being watched, whether by the eyes of the audience or by the eyes of creators who are still seeking inspiration.
