Before we dive into the events of Smithereens, let’s flash back to when this episode first aired: June 5, 2019.

In 2019, guided meditation apps like Headspace and Calm surged in popularity. Tech giants like Google and Salesforce began integrating meditation into their wellness programs. By the end of the year, the top 10 meditation apps had pulled in nearly $195 million in revenue—a 52% increase from the year before.

That same year, Uber made headlines with one of the decade’s biggest IPOs, debuting at $45 a share and securing a valuation north of $80 billion. But the milestone was messy. Regulators, drivers, and safety advocates pushed back after a fatal 2018 crash in Tempe, Arizona, where one of the company’s self-driving cars struck and killed a pedestrian during testing.

Inside tech companies, the culture was shifting. While perks like catered meals and gym memberships remained, a wave of employee activism surged. Workers staged walkouts at Google and other firms, and in 2019, the illusion of the perfect tech workplace began to crack.

Meanwhile, 2019 set the stage for the global rollout of 5G, promising faster, smarter connectivity. But it also sparked geopolitical tensions, as the U.S. banned Chinese company Huawei from its networks, citing national security threats.

Over it all loomed a small circle of tech billionaires. In 2019, Jeff Bezos held the title of the richest man alive with a net worth of $131 billion. Bill Gates followed, hovering between $96 and $106 billion. Mark Zuckerberg’s wealth was estimated between $62 and $64 billion, while Elon Musk, still years away from topping the charts, sat at around $25 to $30 billion.

And that brings us to this episode of Black Mirror, Season 5, Episode 2: Smithereens.

This episode pulls us into the high-stakes negotiation between personal grief and corporate power, where a rideshare driver takes an intern hostage—not for ransom, but for answers.

What happens when the tools meant to connect us become the things that break us?

It forces us to consider: Do tech CEOs hold too much power, enough to override governments, manipulate systems, and play god?

And are we all just one buzz, one glance, one distracted moment away from irreversible change?

In this video, we’ll unpack the episode’s key themes and examine whether these events have happened in the real world and, if not, whether they are plausible. Let’s go!

Addicted by Design

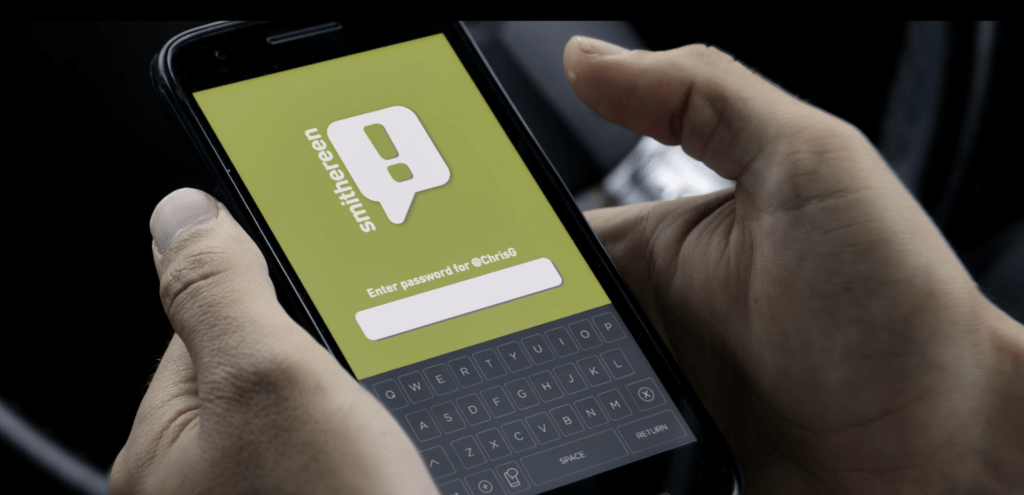

In Smithereens, we follow Chris, a man tormented by the loss of his fiancée, who died in a car crash caused by a single glance at his phone. The episode unfolds in a world flooded by noise: the pings of updates, the endless scroll, the constant itch to check in. And at the center of it all is Smithereen, a fictional social media giant clearly modeled after Twitter.

Like Twitter, Smithereen was built to connect. But as CEO Billy Bauer admits, “It was supposed to be different.” It speaks to how platforms born from good intentions become hijacked by business models that reward outrage, obsession, and engagement at all costs.

A 2024 study featured by Tech Policy Press followed 252 Twitter users in the U.S., gathering over 6,000 responses, and the findings were clear: the platform consistently made people feel worse, no matter their background or personality. As of 2025, 65% of users aged 18 to 34 say they feel addicted to its real-time feeds and dopamine-fueled design.

Elon Musk’s $44 billion takeover of Twitter in 2022 was framed as a free speech mission. Musk gutted safety teams, reinstated banned accounts, and renamed the platform “X.” What was once a digital town square transformed into a volatile personal experiment.

This accelerated the emergence of alternatives. Bluesky, a decentralized platform created by former Twitter CEO Jack Dorsey, aims to avoid the mistakes of its predecessor. With over 35 million users as of mid-2025, it promises transparency and ethical design—but still faces the same existential challenge: can a social app grow without exploiting attention?

In 2025, whistleblower Sarah Wynn-Williams testified before the U.S. Senate that Meta, Facebook’s parent company, had systems capable of detecting when teens felt anxious or insecure, then targeted them with beauty and weight-loss ads at their most vulnerable moments. Meta knew the risks. They chose profit anyway.

Meanwhile, a brain imaging study at China’s Tianjin Normal University found that short video apps like TikTok activate the same brain regions linked to gambling. Infinite scroll. Viral loops. Micro-rewards. The science behind addiction is now product strategy.

To help users take control of their app use, Instagram, TikTok, and Facebook offer screen-time dashboards and limit-setting features. But despite these tools, most people aren’t logging off. The average user still spends about 2 hours and 21 minutes a day on social media, with Gen Z clocking in at nearly 4 hours. Self-monitoring features alone, it appears, aren’t enough to break the cycle of compulsive scrolling.

What about regulations?

A 2024 BBC Future article explores this question through the lens of New York’s SAFE for Kids Act, set to take effect in 2025. The law will require parental consent for algorithmic feeds, limit late-night notifications to minors, and tighten age verification. But experts warn: without a global, systemic shift, these measures are just patches on a sinking ship.

Of all the Black Mirror episodes, Smithereens may feel the most real—because it already is. These platforms don’t just consume our time—they consume our attention, our emotions, even our grief. Like Chris holding Jaden, the intern, at gunpoint, we’ve become hostages to the very systems that promised connection.

Billionaire God Mode

When the situation escalates in the episode, Billy Bauer activates God Mode, bypassing his own team to monitor the situation in real time and speak directly with Chris.

In doing so, he reveals the often hidden power tech CEOs wield behind the scenes, along with the heavy ethical burden that comes with it. It hints at the master key built into their creations and the control embedded deep within the design of modern technology.

No one seems to wield “God Mode” in the real world quite like Elon Musk—able to bend markets, sway public discourse, and even shape government policy with a single tweet or private meeting.

The reason is simple: Musk has built an empire.

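In early 2025, a U.S. State Department procurement forecast listed a planned $400 million purchase of armored electric vehicles that initially named Tesla as the supplier. After the listing drew media attention, the department said no contract had been awarded and put the plan on hold.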

Additionally, through SpaceX’s satellite network Starlink, Musk played an outsized role in Ukraine’s war against Russia, enabling drone strikes, real-time battlefield intelligence, and communication under siege.

Starlink also provided emergency internet access to tens of thousands of users during blackouts in Iran and Israel, becoming an uncensored digital lifeline—one that only Musk could switch on or off.

But with that power comes scrutiny. Musk’s involvement in the Department of Government Efficiency, ironically dubbed “DOGE,” was meant to streamline bureaucracy. Instead, critics say, it sowed dysfunction, with Musk treating government like another startup to be disrupted. Within months, after DOGE fell far short of the promised $2 trillion in savings and amid mounting chaos, Musk left the post and fell out publicly with Donald Trump, rupturing, at least for a time, the alliance between the world’s most powerful man and its richest.

It’s not just Musk. Other tech CEOs like Mark Zuckerberg have also shaped public discourse in quiet, powerful ways. In 2021, whistleblower Frances Haugen exposed Facebook’s secret “XCheck” system, a program that allowed approximately 6 million high-profile users to bypass the platform’s own rules. Celebrities and politicians, including Donald Trump, were able to post harmful content without facing the same moderation as regular users, a failure that critics say contributed to the January 6 Capitol riot.

Amid the hostage standoff and the heavy hand of tech surveillance, one moment stands out: Chris begs Billy to help a grieving mother, Hayley. And Billy listens. He uses his “God Mode” to offer her closure by giving her access to her late daughter’s Persona account.

In Germany, a landmark case began in 2015 when the parents of a 15-year-old girl who died in a 2012 train accident sought access to her Facebook messages to determine whether her death was accidental or suicide. A lower court initially ruled in their favor, stating that digital data, like a diary, could be inherited. The case saw multiple appeals, but in 2018, Germany’s highest court issued a final ruling: the parents had the right to access their daughter’s Facebook account.

In response to growing legal battles and emotional appeals from grieving families, platforms like Meta, Apple, and Google have since introduced “Digital Legacy” policies. These allow users to designate someone to manage or access their data after death, acknowledging that our digital lives don’t simply disappear when we do.

In real life, “God Mode” tools exist at many tech companies. Facebook engineers have used internal dashboards to track misinformation in real time. Leaked documents from Twitter revealed an actual “God Mode” that allowed employees to tweet from any account. These systems are designed for testing or security—but they also represent concentrated power with little external oversight.

And so we scroll.

We scroll through curated feeds built by teams we’ll never meet and governed by CEOs who rarely answer to anyone. These platforms know what we watch, where we go, and how we feel. They don’t just reflect the world; they build the one we live in.

And if someone holds the key to everything—who’s watching the one who holds the key?

Deadly Distractions

In Smithereens, Chris loses his fiancée to a single glance at his phone. A notification. An urge. A reminder that in a world wired for attention, even a moment of distraction can cost everything.

In 2024, distracted driving killed around 3,000 people in the U.S.—about eight lives lost every single day—and injured over 400,000 more.

Cellphone use is a major factor in these deaths: NHTSA data shows that cellphones were involved in about 12% of fatal distraction-affected crashes. That works out to roughly 300 to 400 lives lost each year in the U.S. specifically to phone-related distracted driving.

While drunk driving still causes more total deaths, texting while driving is now one of the most dangerous behaviors behind the wheel, raising the risk of a crash as much as 23-fold by one widely cited estimate.

In April 2014, Courtney Ann Sanford’s final Facebook post read: “The Happy song makes me so HAPPY!” Moments later, her car veered across the median and slammed into a truck. She died instantly. Investigators found she had been taking a selfie and updating her status while driving.

Around the world, laws are evolving to address the dangers of distracted driving. In the United States, 49 states, plus Washington, D.C. and several U.S. territories, prohibit text messaging for all drivers, and hands-free laws are expanding to more jurisdictions.

In Europe, the UK issues £200 fines and six penalty points for using a handheld phone while driving. Spain and Italy have fines starting around €200, and in Italy, proposed hikes could push that up to €1,697. One of the steepest fines currently in force is in Queensland, Australia, where drivers caught texting or scrolling can face fines of up to A$1,250.

To combat phone use behind the wheel, law enforcement in Australia and Europe now deploys AI-powered cameras that scan drivers in real time. Mounted on roadsides or mobile units, these high-res systems catch everything from texting to video calls. If AI flags a violation, a human officer reviews it before issuing a fine.

As for the role of tech companies? While features like Apple’s “Do Not Disturb While Driving” mode exist, they’re voluntary. No country has yet held a tech firm legally accountable for designing apps that lure users into dangerous distractions. Public pressure is building, but regulation lags behind reality.

In Smithereens, the crash wasn’t just a twist of fate—it was the inevitable outcome of a system designed to capture and hold our attention: algorithms crafted to hijack our minds, interfaces engineered for compulsion, and a culture that prizes being always-on, always-engaged, always reachable. And in the end, it’s not just Chris’s life that’s blown to smithereens—it’s our fragile illusion of control, shattered by the very tech we once believed we could master.

We tap, scroll, and swipe—chasing tiny bursts of dopamine, one notification at a time. Chris’s story may be fiction, but the danger it exposes is all too real. It’s in the car beside you. It’s in your own hands as you fall asleep. We can’t even go to the bathroom without it anymore. No hostage situation is needed to reveal the cost—we’re living it every day.
