Before we dive into Loch Henry, let’s flash back to when this episode first aired: June 15, 2023.

In 2023, policing and law enforcement were under intense scrutiny across the United States, and cases in cities and small towns alike made headlines in ways that echo the tensions explored in Loch Henry. In January, Tyre Nichols, a 29-year-old Black man, died after being beaten by five Memphis police officers from "Scorpion," the city's specialized crime-suppression unit.

That same month, a violent and racially charged incident unfolded in Rankin County, Mississippi, when six white law enforcement officers entered a home without a warrant and tortured two Black men.

The officers, calling themselves the “Goon Squad,” were later convicted and sentenced to prison terms ranging from 10 to 40 years.

Meanwhile, across the U.S., many small-town police departments have been forced to downsize or even disband. In Minnesota, the town of Goodhue made headlines in 2022 when its entire police force resigned over low pay and staffing challenges, leaving the community entirely in the hands of the county sheriff.

In 2023, Netflix released Murdaugh Murders: A Southern Scandal, a documentary that peeled back the façade of one of South Carolina's most influential families. What started as a story about a tragic boating accident unraveled into a web of corruption, financial fraud, and generational violence orchestrated by attorney Alex Murdaugh. The series revealed how a powerful patriarch could operate unchecked for decades, in part because an entire community had learned to look away.

All of this brings us to Black Mirror—Season 6, Episode 2: Loch Henry. The episode dives into personal trauma, systemic injustice, and buried community secrets. Davis returns to his small hometown, only to uncover a dark history of violence and corruption, including his own father’s hidden crimes.

In this video, we'll break down the episode's themes, explore real-world parallels, and ask whether these events have already happened and, if not, whether they are still plausible.

1. Still Recording

In Loch Henry, Davis brings his girlfriend Pia home to Scotland, expecting nothing more than a quiet visit and a small documentary project. But the deeper they dig into a local cold case, the more they uncover a truth no one was ever meant to see.

Digitizing his mom’s forgotten videotapes seemed like an innocent act, a way to preserve fading memories, until those memories revealed truths Davis had spent a lifetime unknowingly living beside.

What makes Loch Henry so unsettling is how familiar that unraveling feels. In real life, technology can expose the truth just as suddenly and brutally, often through the very tools we use to record memories. Old camcorders, security footage, and archived videos don’t just preserve the past; they can capture crimes, lies, and hidden actions that were never meant to be seen.

Take police body cameras. They were introduced to make policing more transparent and accountable, yet they have ended up capturing some of the most devastating failures in modern policing.

On March 31, 2021, 22-year-old Anthony Alvarez was fatally shot by a police officer in Chicago. Body-camera footage showed Alvarez being shot in the back while fleeing, despite police claims that he posed a threat.

When Pia first meets Davis's mother, the older woman notes Pia's accent and asks whether she grew up in America. What seems like an innocent question lands as a probing first impression, the first in a series of subtle microaggressions that highlight Pia's outsider status. The tension is heightened by the knowledge that Davis's father was a police officer. These everyday slights reflect a harsher reality: around the world, visible minorities are often scrutinized and disproportionately targeted by law enforcement.

In late 2025, the Edmonton Police Service launched a pilot program equipping body-worn cameras with real-time facial recognition, scanning a "watch list" of more than 7,000 people. Privacy experts immediately raised red flags. Facial-recognition tech is still wildly unreliable, especially for marginalized groups, and rolling it out without broad public consultation risks turning entire communities into living databases.

A 2025 academic study from the University of Philadelphia found that the blurrier the footage, the more facial recognition breaks down. These systems disproportionately misidentify Black people and women, creating a feedback loop of digital injustice.

Even the U.K. Home Office had to admit that the facial-recognition tools used by police produced significantly higher false-positive rates for Black and Asian individuals, in some cases hundreds of times higher than for white subjects.

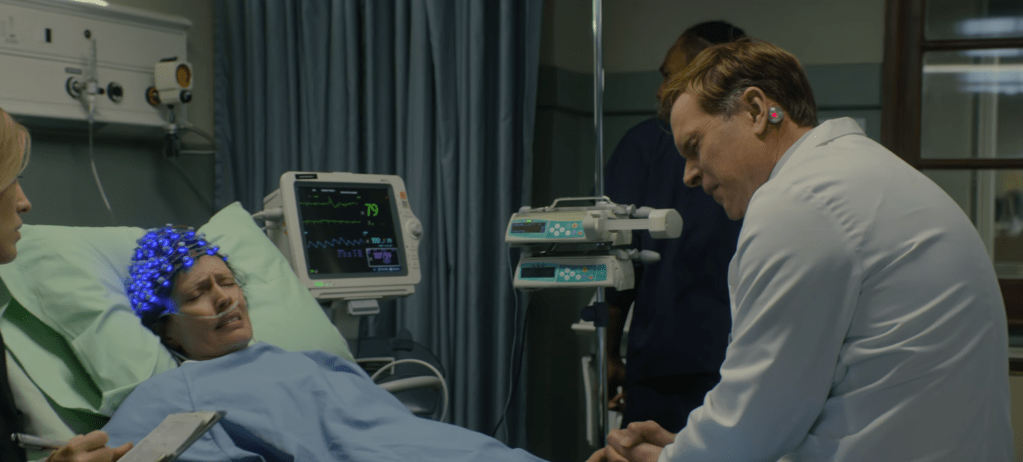

While converting old tapes to digital, Pia discovers footage that reveals Davis’s mother as an active participant in the murders.

Recording adds another layer of power to the abuse. For some perpetrators, the camera is a tool of control. Capturing the act makes it permanent, something they can own, revisit, and dominate long after the moment itself has passed.

In 2025, VICE reported on a video known online as "The Vietnamese Butcher," footage that circulated as entertainment despite documenting a real killing. It was shot from multiple angles and edited like a production.

Online investigators later linked the apparent victim to a Vietnamese man who had previously discussed fantasies about being killed and had sought out others willing to kill him. At the same time, clips and still images from the video were reportedly sold in bundled packs on dark-web forums and Telegram channels.

Recording has always been sold as protection, a way to preserve facts. But from the moment video existed, it also created new problems: new forms of evidence to interpret and new arguments over who controls the narrative. History has shown how cameras can document injustice or turn violence into spectacle, from sensationalized true crime to the disturbing legacy of snuff imagery.

2. The Code of Silence

Once authorities uncovered Iain Adair's torture chamber in Loch Henry, the quiet village was thrust into the national spotlight. But beneath the spectacle lies a familiar story: small communities often close ranks, protecting their own even in the face of terrible crimes.

Real-world parallels make this even more unsettling. Take Thunder Bay. For years, this Canadian city faced national scrutiny over the unexplained deaths of Indigenous youth, many of which were dismissed as accidents or misadventure.

It wasn’t until external pressure mounted that a deeper investigation revealed systemic failures. Yet the truth only emerged fully when journalists and the Thunder Bay podcast revisited the cases, re-examining timelines and bringing long-ignored inconsistencies to light.

Small towns often rely on overlapping social networks—police, officials, and longtime families—which makes whistleblowing socially costly. This is starkly illustrated by Skidmore, Missouri, a town of fewer than 300. In 1981, local bully Ken Rex McElroy was shot in broad daylight on the main street in front of dozens of townspeople. McElroy had terrorized residents for years and repeatedly evaded legal consequences. When he was finally killed, no one identified the shooters. The town’s near-unanimous silence was a deliberate, community-wide decision to shield those responsible.

Loch Henry captures this same dynamic: towns, families, and neighbors often band together to hide uncomfortable truths. And just like in Thunder Bay or Skidmore, it’s only when outsiders dig through old footage and forgotten records that the cracks in the community’s façade are exposed.

3. The Documentary Effect

In Loch Henry, the act of making a documentary rips open old wounds. Davis and Pia set out to film something they assume will barely get traction. But once they pitch the idea to their production contacts and unexpectedly secure funding, the project grows teeth. With real backing behind them, they push deeper into the town’s past.

In the real world, some of the biggest shifts in criminal justice have come from courageous filmmakers and journalists who were supposed to be observers but became participants.

In 2015, Netflix's Making a Murderer thrust Steven Avery, a salvage-yard owner convicted of murdering photographer Teresa Halbach, into the global spotlight. The series re-examined the crime itself alongside allegations of mishandled evidence, coercive interrogations, and the institutional forces that shaped his conviction.

The groundbreaking podcast Serial did something similar for Adnan Syed's case in 2014, drawing millions into a meticulous re-examination of timelines, phone records, and investigative shortcuts. That pressure eventually helped lead to his release in 2022, after more than two decades in prison.

Sometimes, the act of documentation itself becomes the turning point. The Jinx, released in 2015, began as a documentary profile of Robert Durst—a wealthy New York real estate heir long suspected in multiple murders but never successfully prosecuted. During post-production, filmmakers uncovered a chilling moment recorded after an interview, when Durst, still wearing a live microphone, muttered to himself: “Killed them all, of course.” That accidental recording became pivotal evidence, helping reopen the case and leading to Durst’s arrest and eventual conviction.

Modern documentaries often succeed where police investigations have gone cold. Digitizing old tapes, enhancing degraded footage, re-analyzing audio, and applying new forensic tools can expose details investigators once missed.

Series like The Staircase, The Keepers, and Don’t F**k With Cats show how returning to old evidence can fundamentally change our understanding of a crime.

Taken together, these cases underline what Loch Henry captures so well: cameras, recordings, and storytelling don’t simply preserve the past—they dig it back up, pulling buried truths, forgotten evidence, and long-suppressed crimes into the present.

The more we unearth old recordings and forgotten technology, the more the cracks beneath the surface appear. Media can bring accountability, but it also turns private trauma into public reckoning. That tension is what makes the episode feel like a warning, because nothing truly stays buried once someone presses record.

Join my YouTube community for insights on writing, the creative process, and the endurance needed to tackle big projects. Subscribe Now!

For more writing ideas and original stories, please sign up for my mailing list. You won’t receive emails from me often, but when you do, they’ll only include my proudest works.